University of Zurich Researchers Conducted an AI Persuasion Experiment on Members of This Online Community, Without Consent

In March, the volunteer moderators of the Change My View subreddit learned that researchers at the University of Zurich had been covertly conducting an experiment on their community members. The researchers had injected AI-generated comments and posts into conversations to measure how persuasive AI could be.

There was one big problem: They didn’t tell community members that they were being experimented on. They didn’t tell the community moderators. They didn’t tell Reddit’s corporate team. Only when they were getting ready to publish did they disclose what they had done.

It then became clear that, beyond the lack of consent, the researchers had engaged in other questionable behavior: Their AI-written contributions, posted across multiple accounts, had posed as a rape victim, a trauma counselor specializing in abuse, a Black man opposed to Black Lives Matter, and more.

Community response was swift: Members were overwhelmingly unhappy. The moderators insisted that the research not be published. Reddit threatened legal action. Initially defiant, the researchers eventually apologized and pledged not to publish the research.

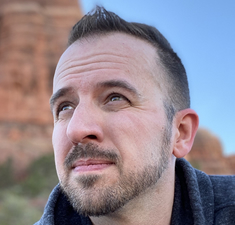

Change My View volunteer moderator Logan MacGregor joins the show to discuss what went on behind the scenes, plus:

- The danger of publishing the research

- Reaction to the apology

- How AI is going to challenge the idea of trusting an online community

As