Elon Musk’s presence has loomed over Twitter since he announced plans to purchase the platform. And for these few weeks that he’s been in charge, many concerns have proven to be justified. Musk laid off 3,700 employees, and then 4,400 contractors. He is firing those who are critical of him. The verification process, perhaps one of Twitter’s most trusted features, has been unraveled. He’s offered severance to those who don’t want to be part of “extremely hardcore” Twitter. Following the results of a Twitter poll, he reinstated the account of Donald Trump, who was suspended from the platform for his role in inciting the January 6th attacks.

So, what happens now? What of the many social movements that manifested on Twitter? While some movements and followings may see new manifestations on other platforms, not everything will be completely recreated. For example, as writer Jason Parham explains, “whatever the destination, Black Twitter will be increasingly difficult to recreate.”

[00:00:04] Announcer: You’re listening to Community Signal, the podcast for online community professionals. Tweet with @communitysignal as you listen. Here’s your host, Patrick O’Keefe.

[00:00:21] Patrick O’Keefe: Hello and welcome to Community Signal.

On October 27th, Elon Musk took control of Twitter, completing the $44 billion acquisition he tried to get out of. Since then, the changes have been many: executives leaving or being fired, thousands of employees and contractors being laid off, and of course, changes or announced changes to how the service approaches moderation, trust and safety. I don’t think I know a community, moderation, trust and safety pro who isn’t critical or even horrified by the shift for a platform already worthy of criticism in this area.

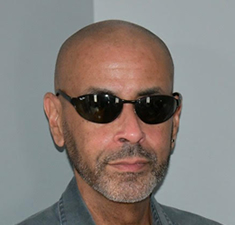

Let’s talk about it with an incredible panel featuring Omar Wasow, co-founder of BlackPlanet and professor at UC Berkeley, who studies protests, race, politics, and statistical methods; Ralph Spencer, who built AOL’s child sexual abuse material database by personally reviewing more than 98,000 images; and Sarah Roberts, a professor at UCLA who studies content moderation and authored 2019’s Behind the Screen about the behind-the-scenes challenges faced by content moderators on the commercial internet.

But first, a big thank you to our Patreon supporters, including Serena Snoad, Maggie McGary, and Marjorie Anderson, whose encouragement empowers us to do episodes just like this.

I also have some bittersweet news to share. After five years, this will be Carol Benovic-Bradley’s last episode as Community Signal’s editorial lead. Carol is a good friend and everything is great, it’s just time to bring this chapter to a close. Thank you to Carol for all of the amazing contributions that you have made to the show and for all of your kindness and support over the years. Your voice will be missed around here.

Let’s introduce our guests!

Omar Wasow is an assistant professor in UC Berkeley’s Department of Political Science. His research focuses on race, politics, and statistical methods. Previously, Omar co-founded BlackPlanet.com, an early leading social network, and was a regular technology analyst on radio and television. He received a PhD in African American Studies, an MA in government, and an MA in statistics from Harvard University.

Ralph Spencer has been working to make online spaces safer for more than 20 years, starting with his time as a club editorial specialist or message board editor at Prodigy, and then graduating to America Online. During his time at AOL, he was in charge of all issues involving Child Sexual Abuse Material or CSAM. The evidence that Ralph and the team he worked with in AOL’s legal department compiled contributed to numerous arrests and convictions of individuals for the possession and distribution of CSAM. He currently works as a freelance trust and safety consultant.

And Sarah Roberts, a professor at UCLA. She holds a PhD from the iSchool at the University of Illinois at Urbana-Champaign. Her book on commercial content moderation, Behind the Screen, was released in 2019 by Yale University Press. She served as a consultant to and is featured in the award-winning documentary The Cleaners. Dr. Roberts sits on the board of the IEEE Annals of the History of Computing, was a 2018 Carnegie Fellow, and a 2018 recipient of the EFF Barlow Pioneer Award for her groundbreaking research on content moderation of social media.

Omar, welcome back.

[00:02:56] Omar Wasow: Thank you for having me.

[00:02:57] Patrick O’Keefe: Ralph, good to have you again.

[00:02:59] Ralph Spencer: Thanks for the invite, Patrick.

[00:03:00] Patrick O’Keefe: Sarah, welcome for the first time.

[00:03:02] Sarah T. Roberts: Thank you. Thanks for having me today.

[00:03:04] Patrick O’Keefe: Elon Musk has made a point to say that, these are his words, “Twitter’s strong commitment to content moderation remains absolutely unchanged.” What he really means, in my view, in true troll fashion, is that the words that Twitter has on the pages that detail their community guidelines and their policies haven’t changed, but obviously, pretty words on a page are just one part of this work and the larger part is having people to actually execute upon those pretty words.

One of his first official acts was to fire Vijaya Gadde, who was Twitter’s legal, public policy, and trust and safety lead. Musk followed by laying off 3,700 people, approximately half of Twitter’s staff, and when that happened, there was concern that the trust and safety team was hit in a big way right before a major US election. Yoel Roth, then Twitter’s head of trust and safety, insisted publicly this was not the case, that it amounted to 15% of the team. In other words, they had been spared the cuts that some other teams had seen. Within a week, Roth had resigned, and a few days later, Twitter had laid off around 4,400 of its 5,500 contract workers, with cuts expected to have a significant impact on content moderation, according to Platformer’s Casey Newton.

Sarah, you tweeted about how these contractor counts are where large platforms like Twitter hide most of their content moderators earlier this year. You’re unique in this group because you spent time as a researcher at Twitter, so you have ex-colleagues that I’m sure you’re talking to. What are you hearing right now?

[00:04:33] Sarah T. Roberts: Yes, I have perspective in a couple of ways. Certainly wasn’t the plan going into Twitter. At the beginning of the year, I took a leave of absence from my job as a professor at UCLA where I study content moderation and I’ve been doing that research for almost 13 years. I went into Twitter to work on the issue of improving upon the conditions of content moderation as they present themselves in the tooling that the moderators at Twitter used. They’re called “agents.” That’s the term of art at Twitter.

Things got weird really quickly. Elon Musk started making overtures fairly early on in the beginning of the year, in spring. Despite what you commented on, his initial stated goal, at least one of the primary goals he had, was to seemingly eliminate content moderation as a practice from Twitter. Anybody with any clue, whether inside Twitter at that time, or an industry watcher of any sort, or having any association professionally with social media, knew what an impossibility that was in many directions, if for no other reason than to meet baseline legal mandates that are in place in many places around the world. Perhaps not so much in the United States, but certainly in other markets as it were.

From my perspective, and from that of my immediate colleagues, our reaction was that he had no clue what he was talking about. I would stand by that assessment. Either he was lying and misleading the public or he had absolutely no sense of scope, and the necessity and the intertwined nature of content moderation throughout the business of social media in its commercial form, or both. I would say it’s both. He continued to talk out of both sides of his mouth when it became clear that he was about to completely tank the actual revenue-generating part of Twitter which was advertising.

Here we are today, we can presume that there have been massive cuts to the actual production, the frontline production content moderators, who would find themselves among that number of contract employees. They come in from third-party companies that Twitter outsources the work to. As you also mentioned, importantly, there were cuts in the first wave of firings that went to people in legal, people in policy, people with the technical skills that are required to implement systems to even do the work that we’re talking about. These cuts are across the company.

Those people who were until very recently on the inside, are the ones who are in the know, and who are telling me, look, you can pretty much start counting down from any moment you care to pick, right now is a good one, to the point where systems will start breaking, there will be no one around with any kind of knowledge to fix them, and meanwhile, you’re going to lose the ability to really control the influx of the kind of material that any player in this marketplace is beholden in some regards or at least considers good business practice to keep off the platform. Business genius? I don’t know. [chuckles] It doesn’t seem like it. Not at least in this regard.

[00:08:03] Patrick O’Keefe: So, because I want to talk about these layoffs, and the staffing, and what it means, but when I first heard about this, I always see the worst outcome of things. It’s the curse of this work, I think. As we were talking in the lead up to this Ralph, as someone who built AOL’s CSAM database and had to review so many images and examples of CSAM, when you hear about the numbers of people being let go at Twitter, what worries you the most?

[00:08:28] Ralph Spencer: I don’t know where to begin. There’s no way possible with the cuts that he’s made that he’s going to be able to do any type of content moderation. I’m so frustrated. I don’t even use Twitter and I’m so frustrated with that, I can’t even think of a good analogy. The only thing I can think of is that line that I quoted to you in the e-mails that we had back and forth from the movie, Wall Street, when the Martin Sheen character comes in and says, “And there came into Egypt a pharaoh who did not know.” This man does not have a clue about what he’s doing.

I not only worked in dealing with the issues involving CSAM, but I also worked, well, yes, in content moderation, but also worked in legal compliance. That’s going to be part of the content moderation, when somebody does dirt: the content moderators, however few of them are there, will eventually pick up on something that’s illegal, and the cops are going to come knocking upon Musk’s door asking for that person’s identification and his membership information, and stuff like that. He isn’t going to even have anybody there who remotely begins to know how to do that type of stuff.

I will end by saying this. I’m on a job list that Google sends out and I get it once or twice a day. They sent me one just before I got on here. I don’t know how accurate it is but Twitter, they actually had a posting for a senior manager for trust and safety at Twitter, just went up today.

[00:09:55] Patrick O’Keefe: Are you not applying?

[00:09:58] Ralph Spencer: [laughs] It’d be about the same as my opinion of going to work for Facebook. I don’t think you can pay me enough money to go in there to try to clean up that mess. It’s an impossibility. I saw a picture the other day in one of the Washington Post articles that I was reading on this, and it had one of the senior trust and safety officials, I think it was, she was asleep in a sleeping bag on the floor in her office.

[00:10:23] Patrick O’Keefe: Yes, it’s a product person, but yes.

[00:10:24] Ralph Spencer: Well, okay. Now when I started at AOL, they were so backlogged that they were hiring folks to do, back then it was called the terms of service group. It eventually morphed into something called Community Action Team or CAT because corporations love their initials and whatnot. We had so much stuff backed up that the rule was the guy managing the team said, “Look, I don’t care how much overtime you work, I just don’t want to see people falling asleep at the terminal, so you can do however much you can, but go home at some point.” I don’t think this is going to be the case at Twitter.

[00:10:59] Sarah T. Roberts: Well, I was just going to add to these comments. First of all, spot on, I don’t think anybody needs to be sleeping on the floor as a badge of honor. That looks like a management failure to me, so we’ll start and end with that right there.

[00:11:11] Ralph Spencer: [chuckles] Exactly.

[00:11:13] Sarah T. Roberts: Maybe if you hadn’t canned everybody, you wouldn’t be sleeping on the floor, but here’s what I want to do, I want to lay a challenge down on the line. I want Elon Musk to spend one day as a frontline production content moderator, and then get back to this crew about how that went. Let us know what you saw. Share with us how easy it was to stomach that. Were you able to keep up with the expected pace at Twitter? Could you deal with and execute on and make good decisions over 90% of the time, over 1,000, 2,000 times a day? Could you do that all the while seeing animals being harmed, kids being beat on, child sexual exploitation material, as Ralph alluded to?

As you said, I will second what you said. He’s got no clue, he has no idea what he’s dipped his toes into, he’s no idea what the Pandora’s box is that he has left open. Unfortunately, the consequences are not just solely going to rest on his shoulders. The whole of us are going to deal with this for some time to come, but I throw that challenge out because the blithe self-assurance with which he operates needs to come into contact with reality and it needs to happen soon.

[00:12:20] Patrick O’Keefe: Omar, as we were talking through these early points, I was thinking about social networks. Ralph mentioned Facebook, we’re talking about Twitter. As someone who co-founded a social network [chuckles] not only before Elon Musk bought one, but certainly before Twitter existed, before Facebook, and one that grew to be exceptionally popular in this space, can you talk a little about the responsibility that you felt in managing the platform as opposed to what you see as an active Twitter user, as someone who’s observing these things from afar right now?

[00:12:49] Omar Wasow: Well, I want to echo the earlier points from Sarah and Ralph. Like, I think one of the first things that really stood out to me was just how naive Musk’s comments were in regard to an enthusiasm for an abstract principle like free speech and no real sense of what that means in practice. Of course, there are multiple dimensions to it. One, of course, is just legal: Twitter is a private service, so it’s not subject to the First Amendment, which is about protection from government intervention in speech.

There’s just at some level, there’s just like a mashing together of concepts, but even if you leave that aside and there’s just a general principle of wanting to support speech, as this was unfolding, I was thinking about a very simple case early on in our days at BlackPlanet where somebody registered with the last name Bocock, and we had a filter that prevented you from using curse words in a name, right? It was just like, it was a very crude text filter, and like there’s not an obvious easy answer to that, right? Do you allow that person through, but then you have to like manually try to adjudicate these things, or do you just have a blanket rule where somebody with a genuinely ambiguous name, in this case, you know? That’s like the dumbest, simplest case, and it speaks to how you can’t just have a simple principle that you can apply universally and have it just work as he seemed to think it would. Then you get into other dimensions of it, which are, I like the title of a book by, I think it’s Larry Lessig, Code is Law, and so much of what actually is the experience of an online community is encoded in all sorts of rules and algorithms and tools.
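The filter problem Omar describes can be made concrete with a minimal Python sketch. Everything here is an assumption for illustration: the blocklist, the function names, and the whole-word alternative are hypothetical and are not BlackPlanet's actual code. It only shows why plain substring matching rejects a legitimate surname.

```python
import re

# Hypothetical blocklist for illustration only; not BlackPlanet's actual list.
BLOCKED_TERMS = {"cock"}


def naive_filter(name: str) -> bool:
    """Return True if the name is rejected by plain substring matching."""
    lowered = name.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def whole_word_filter(name: str) -> bool:
    """Return True only if a blocked term appears as a standalone word."""
    words = re.findall(r"[a-z]+", name.lower())
    return any(word in BLOCKED_TERMS for word in words)


print(naive_filter("Bocock"))       # True: the real surname is rejected
print(whole_word_filter("Bocock"))  # False: the surname passes
```

The whole-word version is not a fix so much as a different trade-off: it stops rejecting names like this one, but it also misses a blocked term that someone embeds inside a longer string, which is why cases like this tend to end up in front of a human reviewer.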

There are ways Twitter could be more tolerant of terrible speech if it had much better tools for allowing me to just subscribe to some kind of mass blocking tool where it’s like, okay, I can effortlessly filter out all of these jerks and they can be noisy, but be like, I’m not exposed to them because we have good tools for allowing that. Here’s the other detail, right? If you look at the First Amendment, freedom of association is part of freedom of speech, and so what’s really important in an online community is having some capacity not just to express yourself but to manage who you interact with. Twitter doesn’t have very good tools for that, right? It’s very cumbersome to have to manage who you interact with and so there aren’t ways in a more sophisticated suite of tools that you could allow for more heterogeneous, here’s troll Twitter and here’s a more intimate safe Twitter, but you would need a much more robust set of tools to do that and it doesn’t have it and nothing Musk has said reflects any understanding of that level of nuance at just multiple levels, the concepts, at the practical level of how does this work, actually have thoughtful engagement. Everything he said has had the quality of good bumper stickers but totally divorced from as I said, reality, and that doesn’t bode well, obviously.

One other thing I would say, and I’m getting a little far away from your question but it’s taking me – in the debates on Twitter, one friend really pushed me and I think there is this ideological dimension to what Musk is motivated by, so like these messages between he and I guess an ex-wife about how this right of center satire site, the Babylon Bee got, I forget what happened but they were muted or whatever, suspended briefly. That was somehow so mobilizing that it combined with other things we’ve seen him say, you know, support the Republican party or this cryptocurrency FTX funding, secretly funding the Demo-, I mean just all weird conspiracy stuff.

You just have a sense of him as having gone off the deep end of a certain dark web conspiracy theory madness. To have somebody who seems just like practically divorced from reality running the whole site is unsettling.

[00:16:54] Patrick O’Keefe: It is.

[00:16:55] Omar Wasow: [laughs] I’m just laughing. The story we’re telling you does not bode well.

[00:16:59] Patrick O’Keefe: It doesn’t.

[00:16:59] Sarah T. Roberts: I can relate to Omar’s desire and the desire of others to try to psychoanalyze what’s going on because what else can we do in this moment? It reminds me very much of a period of time that we were in for about four years and continue to be dealing with in terms of asking questions about who hurt you as a child or what’s going on with you internally so we can understand behaviors, but at the end of the day, it’s an impossible task and we’re left with the behaviors and the actions and the outcomes, all of which I think are worrisome at the very least.

This person has indicated a disdain for rules and terms of engagement that were set up by Twitter, presumably thoughtfully over time, and with the notion, at least nominally, of inviting a climate that would allow the most number of people to participate while also creating an environment that was hospitable to its actual clients who are advertisers. I think of that as a balancing act or as something of almost like a valve, and each site, each platform, each brand comes up with its own recipe and its own determination of where it’s going to fall along those lines.

Twitter was fairly permissive with regard to many things. For example, adult consensual content flourishes on that site. It had a good enough algorithm that I didn’t come across it particularly, but for those who enjoy that material and want to engage with it, is freely available on the site. That’s something that other companies have decided to ban or disallow. That’s just one example of how sites vary in terms of where they draw the line on content.

Now, interestingly, we have a person who is on the record as wanting greater speech in theory but has also paradoxically now become the content moderator in chief. In fact, what we’re seeing in terms of site policy seems to largely fall down around him becoming embarrassed and making new rules about what one can and cannot say with regard to his own self-image. No making fun of Elon Musk’s name unless you indicate it’s a parody in your name on Twitter, just a whole bunch of bizarre things.

Back to Omar’s point, like are we seeing the unraveling of an individual who is in a great position of power right now? I mean that may well be or he may just have always been that way and we’re getting a little too up close and personal, but the bottom line is that I think one thing that we are seeing in real-time is what a danger there is in having one individual, especially a very privileged individual who does not live in the same social milieu as almost anyone else in the world, one very privileged individual’s ability to be the arbiter of these things and these notions, these profoundly contested ideological notions of something like free speech which again is continually misapplied in this realm.

[00:20:12] Ralph Spencer: To piggyback on what Sarah was saying, the example that Musk is supposed to be running the company, and he did mention something back when he got on board about creating a content moderation staff, some sort of executive-level content moderation staff. Meanwhile, he’s running around retweeting that story about Nancy Pelosi’s husband, the false article about what happened between him and his attacker. What kind of example is that to set? Again, it just becomes extremely frustrating to think about this situation sometimes. I don’t know what to say about the whole situation. The more I think about it the more ridiculous it becomes.

Basically, what it is to me is like this kid who has way too much money and he found a new toy he wants to play with.

[00:20:59] Omar Wasow: Yes, I had the same thought where it’s like we all make impulse buys, but in our case, it’s like you bought a Snickers bar, and in his case, he’s so rich, he impulsively buys this global town square.

I was thinking, Patrick, and something Sarah said just triggered an incomplete thought that I was working on earlier, which is the ideological project is in some ways only half of what matters. If you’re somebody who’s running a site, I think there’s a certain kind of disposition whether you’re on the left or the right, which is you have to have a small-d democratic personality, which is to say like you really have to be comfortable with a thousand voices flourishing, a lot of them being critical of you and that’s not something that you take personally.

I think there’s a kind of person, a kind of leader who really can sit with critical feedback and listen to diverse voices and not have it feel like an attack on their ego. The point about the ideological project is not so much that it’s a problem that he seems very radicalized by certain right-of-center issues. It’s more that he seems unable to occupy a position that’s really ecumenical and open to a wide range of views on a site like Twitter that’s really antithetical in some ways to the ethos of the site.

[00:22:21] Sarah T. Roberts: Building on this notion of how free speech is being operationalized versus maybe theorized in this space, I think we really have to indict more broadly the commercial social media industry as it stands today in the following way. First of all, there’s the conflation: this notion of free speech as it’s enshrined in the US Constitution, again, is very specific, we’ve articulated that, and yet these are companies that have endeavored to be global and to outsource, if you will, or export this very peculiar, very particular, very Silicon Valley implementation of that notion.

While that might sound great in principle, I think we need to actually apply the more critical lens to that and say, “Okay, when the companies talk about free speech, what is it that they’re actually saying? What does that mean in practice?” I think one of the things that’s happening with Musk is that he’s really laying bare this particular self-serving implementation of that notion in the sense that he’s lacking a certain amount of finesse that some of his peers or his aspirational peers have demonstrated.

When Silicon Valley talks about free speech, what is actually being talked about is a level of reward without responsibility. What I mean by that is when you declare that your product, your site, your platform, your service is a free speech zone, there is always going to be a limit on that speech. It may be imperceptible to the average user who’s not going to bump up against it, but we had the terrible at the lead, at the top of this conversation, the terrible example from Ralph who’s saying open up a social media site and get ready for the child abuse material that’s going to come over this site.

That is the most extreme example that we can come up with but that is content moderation. To remove that material, to disallow it, to enforce the law means that there is a limit on speech and there ought to be in that case. If there’s a limit on speech, it is by definition not a free speech site. Then we have to ask, well, what are the limits and who do they serve?

When the companies talk out of both sides of their mouth, what they’re doing is selling this notion of free speech that everyone can read themselves into, while at the same time, telling their advertising partners and everyone else, “Look, we’ve got limits and we’re going to enforce them to our benefit and to your benefit.”

That’s really a ploy, a get-out-of-jail-free card in essence, where they get to retain all the power to make the rules, all the power to do that under the shroud of darkness and in the background, they give us a friction-free experience for the most part of content moderation where most users don’t encounter it and so they don’t perceive of it, and yet they do everything for their own benefit in terms of managing their relationships.

When I hear people invoke free speech on a for-profit social media site, I say, not only does that not exist today, it never has existed and it never will exist. Let’s deal with what reality is actually giving us and talk about that instead of these fantasies that actually are pretty much not good for anyone involved.

[00:25:45] Ralph Spencer: Well that’s a point right there. I’ll have to piggyback on what Sarah said right there. Now, Musk came in here riding in on his great white horse talking about how this was supposed to be the free town square and there’s going to be free speech and all this stuff. Let’s not forget the main thing, Musk is in this to make a buck. All the stuff about free speech, hey, he said it, you know it’s BS. He’s in it to make a buck and that’s what the whole thing is about so that’s what I’m wondering how all that’s going to work out now.

The other thing, piggybacking on something else that Sarah was saying, Section 230, they’ve still got, all of these companies still have Section 230 that they can hide behind. Anything that goes wrong, they can just run and hide behind Section 230 as long, my understanding of the law is for the entire 20 years I worked at AOL is as long as you don’t know about it, you don’t have to worry about it. Unless somebody reports it to you, do what you will.

Now, I can say that the people that I worked with took the situation that I dealt with seriously enough that we were more proactive about dealing with the problem. That’s how I ended up having to build a database of 98,000 hash files from actual images because we actually went out and scanned for the stuff. Not everybody did that. We did make that database available to other online companies through this consortium that we were in, that AOL was in at the time with NCMEC, the National Center for Missing and Exploited Children.
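As a rough illustration of what scanning against a hash database means in general, here is a minimal Python sketch. It is not AOL's system: the transcript does not say which hash function or storage was used, so SHA-256, the `HashDatabase` class, and its method names are all assumptions chosen for the example.

```python
import hashlib


def file_digest(data: bytes) -> str:
    """Return a hex digest of the raw file bytes (SHA-256 is an assumption)."""
    return hashlib.sha256(data).hexdigest()


class HashDatabase:
    """Hypothetical in-memory set of digests for previously reviewed files."""

    def __init__(self) -> None:
        self._digests: set[str] = set()

    def add(self, data: bytes) -> None:
        """Record the digest of a file that a reviewer has already classified."""
        self._digests.add(file_digest(data))

    def matches(self, data: bytes) -> bool:
        """Return True if an uploaded file is byte-identical to a known one."""
        return file_digest(data) in self._digests


db = HashDatabase()
db.add(b"example file contents")
print(db.matches(b"example file contents"))  # True
print(db.matches(b"different contents"))     # False
```

Because only digests are stored, a database like this can be shared with other companies without sharing the underlying files. The limit of this sketch is that a cryptographic hash only matches exact copies, so any change to the file bytes produces a different digest.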

A company called United Online was in with us, as well as Verizon and Google. From what I can remember, United Online or Yahoo came in there then. Yahoo, United Online, and Verizon took the database that we offered. I don’t think Google went with it at the time. I don’t know if it’s still there and if they’re still doing it, but I know that Verizon actually took it for three separate products that they had, but again, it’s being proactive. I’m thinking if Musk knows anything about what’s going on, somebody’s probably told him about Section 230. That may be the first thing he thought about when he thought, “Oh, well, let me run over here and buy this because it’s not going to be a problem. I’m not going to have to deal with this.”

I read another quote from one of his lawyers. The FTC, the Federal Trade Commission, is starting to breathe down his neck, and not soon enough. One of his lawyers or one of his yes men said basically “Elon puts rockets into space, he can deal with the FTC.” Well, it’s a whole big difference from trying to deal with stopping a riot and somebody trying to overthrow the government as opposed to either putting a rocket into space or trying to figure out how to make a car battery last longer.

[00:28:31] Patrick O’Keefe: I want to talk about verification a bit just because I see it as a trust and safety feature.

[00:28:35] Ralph Spencer: Ah, yes, let’s, please. You got eight bucks?

[00:28:38] Patrick O’Keefe: Impersonation on Twitter has always been an issue. To combat that, Twitter launched verification once upon a time. You can make an argument, in my view, that verification was probably one of Twitter’s more popular, more successful features. Not perfect, had issues, but pretty solid in how they did a good job of establishing that the blue check meant with a reasonable degree of certainty that the account was who it claimed to be. Musk has gutted verification offering blue checks to anyone, as Ralph said, that has $8 without vetting, and as pretty much everyone predicted, you didn’t need to have done any of this work before to predict that it would lead to an increase in impersonation on the platform and that’s what it did.

While there is this undercurrent of jokes and parody, there is also this real great harm that it presents and I’m sure has already played out in the DMs and in other areas we can’t see as well as on the public site because people have been conditioned to trust the checkmark. The only place online I’ve posted an explainer right now on this was on Facebook because the people I’m connected to on Facebook are older people in my life who are not as read in on the minutiae and drama of what’s happening right now. Not to say they were super into Twitter, but they are more likely to trust that willingly and just with no other context. I was like, hey, no more. You got to pay attention to what you’re looking at over there with the checkmarks.

I think, for me, one of the more troubling examples I can come up with is governments and government officials. After Washington Post columnist Geoffrey Fowler was able to impersonate Massachusetts Senator Ed Markey in just minutes, Senator Markey sent a letter to Musk asking some questions about Twitter’s processes, and Musk, the troll, responded on Twitter by saying that it was “perhaps because his real account, Senator Markey’s account, looked like a parody,” before also commenting on the fact that his profile picture has a mask on and asking why his PP, shorthand for profile picture, had a mask on it. He’s a troll as I said, obviously, but the harm caused by these verified accounts is real. Sarah, you’re the only one on the panel who’s actually verified. How much did you pay?

[00:30:38] Sarah T. Roberts: $0 and no cents.

[00:30:40] Patrick O’Keefe: For clarity, what was the process when you were verified?

[00:30:43] Sarah T. Roberts: There was a mechanism by which you could apply for that on the website. There was a verification website at the time. My understanding was that when I did apply, there was a pause on the program, but I put it in anyway, and I was verified. This was before I had spent any time at Twitter or really knew much about what went on inside, not that I know that much about that process from the inside angle anyway, but as an anecdote of a very silly simple way that identities were abused and how that verification process was used to combat that, I have a friend who’s a professor in the Midwest, I don’t want to get too much into the details, who experienced somebody creating an account exactly with one character difference in the username and using his profile picture, his exact bio, totally copying everything about his profile for reasons unknown.

There are many reasons that people do that, and I think a lot of the reasons is to start a financial scam. That’s one of the reasons that people would do that, a more mundane reason from some of the other scary things that people might do. He appealed to Twitter and said, “Look, somebody’s impersonating me. First of all, can we get this account banned? Secondly, can I be verified?” Both of those things happened. His options were leave Twitter and be freaked out, or stay on Twitter, keep engaging, keep contributing and do so with a certain level of security in place and also to let people know if you see an account that doesn’t have this, it’s not me. That was an experience that I think he would admit it’s not super high profile, that’s not something he endeavors to do, so the whole thing was weird.

In terms of what makes Twitter different or special from other platforms, I think we can all agree that Twitter, in terms of user base and in terms of things like just simple numbers of how many people worked there prior to the canning of everyone, it had a fairly small footprint compared to other players at its echelon. However, it has outsized social impact, whether it’s in the political arena, whether it’s in social movements, whether it’s in celebrity usage, all of these things have been true.

In terms of political movements, the good, bad, the ugly, we saw an insurrection against the United States launched by the president of the United States on Twitter, so it’s not all rosy, but the point is that Twitter had this outsized power and part of that could be attributed, I think very fairly, to this verification process that let a lot of high profile folks, prominent individuals, media organizations, other kinds of people in the zeitgeist or in the public eye, engage with a certain sense of security.

What did alleged business genius Elon Musk do? He came on the platform. First of all, he told everybody it was going to be $20 a month for everyone and anyone to get this verification badge. Stephen King, noted famed author, turned around and said, “Hey, buddy, you should be paying me to write on your platform, not the other way around. You’re nuts if you think I’m going to pay you $20 a month.” Musk immediately undercut the value that he had ascribed into this badge at $20 a month. He said, “How about $8 a month?” Undercuts it by over 50%, money going out the door all the way.

Again, as we’ve all said, ostensibly, the guy’s goal in owning this platform other than just having a toy for a very, very rich person with arrested development was to make money because that’s what he’s all about, and yet he essentially devalued one of the most arguably valuable or at least special features, unique features of Twitter before our very eyes and has really made it in many regards useless. The utility of it has been completely undermined and folks have been taking a lot of humor out of that, but also, as you said, it is being gamed.

Somebody had a fake Eli Lilly account the other day and said very righteously, I might say, “Hey guess what, insulin is now free,” and the stock took a nosedive. That’s going to make some people irate, the kind of people that Elon Musk doesn’t want to tick off, major corporations. I guess he thinks the FTC is a joke, but like you said, the FTC, they’re not a joke. They’re here to be real.

[00:35:09] Patrick O’Keefe: Omar, I want to ask you about social movements but I actually wanted to ask you about something you commented on Twitter about the $8 charge, which was that it felt like a protection racket. How did you mean?

[00:35:18] Omar Wasow: Two quick comments. The first is that typically, somebody who’s running a club and VIPs come to the club, the VIPs get in free because there’s cachet that– there’s a spillover effect of having celebrities and models and VIPs at your club and so you do everything you can to get those people in. Verification was one way, as Sarah noted, of having a VIP room in a way that encouraged those folks to show up.

The first thing that’s just sort of weird about Musk’s model to me is that it inverts in some ways the core value of what that check did which was to attract people who, like Stephen King, create the content that other people show up to consume in significant part. It’s like having a club where you charge the VIPs and you let everyone else in free. It just doesn’t make a lot of sense.

Then to the point about the protection racket, the way he was talking about it, you would see this in his replies to people where he would say, you want to be critical? Sure, but pay $8. Particularly in the beginning when he was talking to people who had the checkmarks, there was a sense of, you got this thing and it would be really terrible if you lost it, but you can pay me $8, and it was almost as if there was this implied threat to the people who were in some ways there, the most favored members of Twitter, and he backed off of that threatening the VIPs.

I think one of the really big puzzles here across all of these decisions is in theory, yes, he’s a businessman who’s trying to make a profit, but in practice what we see is decision after decision that is kicking the legs out from the business. Making it a less safe place for advertisers, having Eli Lilly lose billions in the stock market. These are not things that are going to help.

Then the subscription service that they did offer, this is the other part about the protection racket is I think there is a subscription business to be had on Twitter, that like if they had a good bundle of value, that’s something that I and others might pay for. But instead of saying, here’s this set of tools that are really helpful for your communication expression and publishing and managing all of that, it was more like, you want to be here, you got to give me $8 and if you don’t, there’s this implied threat.

[00:37:41] Ralph Spencer: Actually, I was just going to comment on how that Eli Lilly probably cost, well basically didn’t probably, it cost Twitter quite a lucrative advertising account because Eli Lilly walked away and they were the latest, I think, in a long line of folks who are just running away from Twitter since Musk took over. Again, the whole thing about him making money, I don’t see how this works out. He can’t figure out what he’s doing.

[00:38:07] Patrick O’Keefe: Sarah mentioned social movements. Omar, I’d be remiss given your study of protests. One thing I’ve heard expressed several times, as I’m sure we all have, is just how Arab Spring, Me Too, Black Lives Matter, and other movements manifested on Twitter. The question I hear is, could that happen on Elon Musk’s Twitter? What’s your take on that? Is that a possibility? What do you think?

[00:38:27] Omar Wasow: I think that in the current version of Twitter, which is to say, mid-November 2022, I think it could. I don’t think we’ve seen the underlying infrastructure degrade so much or his very capricious moderation affect some of the core infrastructure, which is to say if there were a protest, heaven forbid, an unarmed person gets shot in the United States tomorrow, that’s caught on video and that would get circulated on Twitter, I don’t see evidence right now that that kind of content is being filtered or that the emergent movement that might form around that would be blocked, but I have no optimism or no confidence that that’s going to be the case going out months or years.

That works out on a couple of different levels. One is, I think we have good reason to fear that the infrastructure is going to get considerably worse over time. He’s fired enough of the people. In some ways, we think of Twitter as like, oh, it’s a Silicon Valley company, but in a lot of ways, it’s like a telephone company. It’s got a lot of boring infrastructure that has to be maintained so that it’s reliable. He’s taken a bunch of these pillars or blocks in the Jenga stack and knocked them out and it’s a lot more wobbly now.

I think one, it’s easy to imagine that were there a movement where there was like a massive crush of activity, that that might actually overwhelm the site, which would take it back to its early days where the fail whale used to show up all the time, and part of what people worked for many years to prevent is those sorts of internal failures. It might be that a crush of activity would actually not be supported by the infrastructure. I think it’s really important to think about in some ways how he is exposed to authoritarian regimes.

Let’s imagine a movement in China and to what degree, because China’s a big customer for Teslas, would he have to be much more concerned about how that shows up? Of course, I don’t think Twitter’s available in China, but it’s like that doesn’t mean that discussion of Uyghurs on Twitter might not be subject to moderation or any of the other potential– Russia could potentially exert some influence on Musk to moderate content.

I worry that both at a technical level and at a degree to which, as Sarah noted, there’s one point of failure and that one point of failure is not somebody who seems to be a really independent actor. There’s every reason to worry that, going down the road, there will be essentially biased restrictions or technical restrictions on people’s ability to organize on Twitter.

[00:41:07] Sarah T. Roberts: Well, let’s just take the example that is in place right now, which is the extreme investment that the Saudis have made in the platform financially, in terms of making the purchase possible. These are folks who have had a disturbing track record in terms of dissent. They have these two cases that are known, placed individuals inside of Twitter who were revealing information about dissidents and outspoken critics of the Saudi regime, and they now own a considerable chunk of that investment. Just to put it bluntly, they own a lot of Musk.

This is worrisome to the point that the federal government has questions about that investment and its potential to harm the security of the United States. Those scenarios, they’re not even imagined or hypotheticals, they’re happening now and there’s not a clear indication that, again, Musk has the wherewithal to hold his own against those types of forces or even is interested in that. Whereas prior to the mass firings, there were many, many people who all worked for Twitter but did serve to a certain extent as a series of checks and balances on each other and they are all gone.

To Omar’s point about the potential for Twitter to serve different needs and to address people’s different needs because not everybody comes to Twitter for the same reason. By developing tools and by developing individualized experiences through the technology, I’m here to affirm that those kinds of ideas were very much on the roadmap. Guess what? All the people with the technical prowess, the product ability, the project management ability to lead, they’re gone.

[00:43:01] Patrick O’Keefe: I want to come back to this idea of this commingling of companies and his conflicts. Because when the Twitter sale was first announced, I made this serious but also very sarcastic point online, which was like, I can’t wait to see the policies Twitter puts in place that make Elon Musk companies subject to the same policies as everyone else. I knew that wasn’t going to happen but I was like, I can’t wait to see what these policies are that’s going to be like, “Oh yes, he’s got conflicts but this is how we’re dealing with that,” because we’ve already seen these weird comminglings; Tesla engineers at Twitter HQ, which is just a publicly traded company working at a privately held, I don’t know, it’s some man who’s never been held to any consequences, I guess, in his life. How’s that work?

Then SpaceX just announced a big ad buy on Twitter, essentially moving money from one pocket to the other. We’re seeing that just with his companies that we know about that he’s CEO of. It’s his little fiefdom and it’s very odd. Then you get into, obviously, the conflicts with different countries internationally, who owns Twitter, who he owes money to, similar to Donald Trump that we referenced earlier.

[00:44:00] Ralph Spencer: Exactly.

[00:44:01] Patrick O’Keefe: It’s super uncomfortable. I think I want to come back to something Ralph highlighted, which is the content moderation council, which was immediately parodied on SNL. Okay. I wish they had had a content moderator for the sketch because I feel like it could have been funnier. It was funny to see content moderation on SNL and he has said something about it real quick. Right when he took over, he tweeted that he planned to form it and that it would have “widely diverse viewpoints.” That’s all that he said about it in the very full couple of weeks we’ve had here.

Just observations on that. Is this a pretext for making it look like thoughtful consideration was put into it when they unbanned Donald Trump? That it went before the council, that we thought about it, talked about it? What does it end up looking like? What do we expect from a content moderation council at Elon Musk’s Twitter?

[00:44:47] Ralph Spencer: You made the Trump comparison. First thing I can think of is Trump and the Republicans saying they’re going to get rid of Obamacare. We don’t know what we’re going to replace it with, but it’s going to be better. That’s what this has sounded like, “Oh, and all the best people.” He might have meant it when he said it but think about all the stuff he has going on around him. Now with the great big mess that Twitter has become, notice how you haven’t heard any more about it.

[00:45:12] Sarah T. Roberts: You know, you’ve got three people on with you. Let’s put it this way. We’re in our middle age. Let’s be genteel about it. We’ve been around the internet for– [crosstalk]

[00:45:22] Patrick O’Keefe: I’m close.

[00:45:23] Sarah T. Roberts: Yes, right? I feel that I look youthful but I’m coming up on 30 years online next year. You know what–

[00:45:28] Ralph Spencer: I’ve already got to 30 years. [crosstalk]

[00:45:30] Sarah T. Roberts: Right? I know.

[00:45:31] Patrick O’Keefe: Everyone whip out their birth certificates.

[00:45:33] Sarah T. Roberts: It’s like this, if you think a social media content moderation council is a good idea, don’t you think that brain trusts on here might be able to tell you the story details of the successful content moderation councils that were in place on massive online sites over the years that worked so well? I really do think he thinks nobody else thought of the stuff that he’s thinking of. These are bad ideas that don’t work when evaluated six ways to Sunday and have been thrown on the discard pile. It’s not because everybody wants to be unfair, it’s because it doesn’t scale.

Again, I’m going to end with how I opened which is to challenge Elon Musk to go spend a day in one of the third-party outsourced content moderation companies that Twitter had, at least until recently, on its beck and call and sit down and spend a day doing that job. Then you tell us, sir, how well your content moderation council will work. I mean, you’re in a fantasy. Unless the council is Elon Musk in the morning, Elon Musk in the afternoon, and Elon Musk in the evening, it’s a non-starter, and it’s a non-starter that way too but so far, that’s about the long and the short of the idea that he seems to have is to be the arbiter. It’s never going to work.

[00:46:53] Omar Wasow: Yes. It struck me as just a kind of buying time that everybody expected him to bring Trump back and he was delaying that at least until after the election. More generally, and Sarah said this earlier, there’s this way in which he has two very different kinds of discourse. One is the letter that went out the day before he took over, which is, I want this to be a great place for advertisers and I want this to be the place where there’s lots of thoughtful discussion or whatever. Then I think it was not 48 hours later that as Ralph mentioned, he’s you know, posting a conspiracy theory about the attack on Pelosi’s husband.

It has this Dr. Jekyll and Mr. Hyde quality where he swings between saying something that seems at least reasonable and then behaving in ways that are wildly unreasonable. To Sarah’s point, it still may not be a good solution but it’s at least in practice, it sounds like something a corporate-speak CEO might say is like is that we’re going to do it right. I think that gets to something else, Sarah noted, which is, there’s some research in social science that as you become more powerful, as you become wealthier, it becomes harder to empathize with the average experience in the world. That shows up in interesting ways in psychology tests.

You just get a sense that he is so divorced from what the actual day-to-day operations of the site are, what the lived experience of it is. He’s obsessed with like bots and spam but why is that such a compulsion for him? Well, he has 100-plus million followers and when he looks at his replies, there’s probably a lot of bots and spam there. That’s not where I live because I’m a civilian. His perspective is distorted in a way partly by the investment around him but partly also by just being so way out of proportion to almost any other human on Earth, wealthy and in this case, powerful.

We were joking earlier about superpowers. It’s a kind of an anti-superpower, it’s a kryptonite for him. He actually has an inability to see, I think, in a very important way, how he’s failing and failing in a very public way. That’s, in some ways, a product I suspect, of the degree to which he just is looking at the world from these commanding heights and actually doesn’t understand how, in really plain terms, he’s embarrassing himself.

[00:49:19] Ralph Spencer: That’s just the thing, Omar. I have to question, do you really think he knows he’s failing?

[00:49:26] Omar Wasow: I don’t think he knows he’s failing. The behavior is so strange that to my mind, a type maybe there’s like he’s sleep deprived and he behaves in this chaotic way or there’s some other set of issues but it does not appear to me as somebody stable, fundamentally.

[00:49:42] Sarah T. Roberts: It doesn’t feel like a very stable genius, does it? [laughter] The behavior feels pathological. I’m not licensed in clinical psychology so I will cop to that right now but I am a human being in the world who’s been around people who seem to have personality disorders or other issues that cloud their judgment and make them unable to relate to others, and beyond the simple fact that he’s a person without equal financially and therefore completely isolated, there’s demonstration of other behaviors that are worrisome for someone with so much power.

For example, his idea of free speech on the site, this is where we drill down to brass tacks. What was it? He said, “Gosh, I’m such a benevolent guy. I’m going to let this kid who has the account that follows my jet around still post that even though it’s a great security risk to myself.” Meanwhile, there’s war zones in the world, the planet is being destroyed by things like private jets, the Amazon burns, there’s unrest, and he’s worrying about the free speech of that he’s going to allow with this bot account that follows his jet, but all of this aside, the bottom line is that most people ought to agree at this point that the existence of a person with this kind of wealth is a policy failure.

We’ve heard many people say that over the years, including some billionaires themselves. Why are we letting the product of a policy failure have this kind of impact and power on the rest of the world? That’s the question that I think ought to be at the very root of what we collectively ask ourselves. If we decide collectively that maybe this isn’t a great idea for humanity and for our day-to-day lives and those of others that we care about, what are we going to do about it? Because Elon Musk is one very powerful person but we as a collective also have a voice and we have the ability to rescind participation or take it elsewhere or resist or voice our dissent until such time that we can’t.

My question is, what are we going to do about this person who is failing publicly, who is a disaster for the planet and the rest of us? What gives him the right, at the end of the day, what gives him the right? When do we get to say, you know what? No more.

[00:51:55] Patrick O’Keefe: At the very least, don’t pay for Twitter Blue. Faced with many choices in this life, you’re a simple number in a database. If you pay for Twitter Blue, I know you love bookmarks, I know you love organizing your bookmarks– [crosstalk]

[00:52:04] Patrick O’Keefe: It’s all in Elon’s database. He just goes there and he says, this is a win. They paid $8.

[00:52:09] Sarah T. Roberts: Yes, well.

[00:52:10] Ralph Spencer: I got one thing to say about it. Think about these names, Prodigy, CompuServe, MySpace, Netscape, what happened to all of them? The way Elon Musk is going, looks like he’s trying to run Twitter, Twitter’s going to be on the same scrap heap as those companies, and remember that they were pretty big in the industry at one point in time.

[00:52:33] Patrick O’Keefe: He’ll still be here because he’s so wealthy.

[00:52:36] Ralph Spencer: Well, it’s like a friend of mine that I used to work with once said, the rich live very different lives than the rest of us.

[00:52:43] Omar Wasow: I think there’s a slightly different scenario, which is that he destroys a lot of value, echoing what Ralph said, but that then just also echoing what Ralph said, that he gets bored and that we’ve seen that he’s impulsive and at a certain point, this is going to stop feeling exciting and it’s probably already not fun. It seems entirely plausible to me that within a couple of years, it gets sold for half or a 10th of what he paid, and potentially, not that the destruction particularly of the infrastructure is a good thing, but in some ways, the best possible outcome for something like Twitter would be to live at an entrepreneurial nonprofit that was larger and scaled more than most nonprofits.

It really is more like a news organization than it is like Facebook, and it would be nice if it had a genuinely benevolent overlord that saw it as this really important civic infrastructure, and so I think the only thing that gives me some hope is that Musk is doing so much damage that it might be possible to sell it in the future at a price that could take some of the pressure off of it to be some high growth, high-profit venture that it probably can’t be.

[00:54:02] Patrick O’Keefe: Well, I’m looking forward to Elon’s response to Sarah’s challenge, and if I happen to hear anything, I will definitely let you know, but that aside, this has been a real pleasure to discuss this whole Elon Musk thing with such a qualified panel. Omar, Ralph, Sarah, thank you so much for spending time with us today.

[00:54:21] Omar Wasow: Thank you.

[00:54:22] Ralph Spencer: Thank you, Patrick.

[00:54:24] Sarah T. Roberts: It’s been a pleasure and it’s been great to be with the esteemed folks that are here today, so thank you.

[00:54:31] Patrick O’Keefe: We’ve been talking with Omar Wasow, assistant professor in UC Berkeley’s Department of Political Science and co-founder of BlackPlanet. Connect with him at omarwasow.com. Ralph Spencer, a trust and safety consultant formerly of AOL and Prodigy. You can find Ralph on LinkedIn, and we’ll include a link in our show notes. And Sarah Roberts, a professor at UCLA and author of Behind the Screen. To learn more about Sarah’s work, visit her website, illusionofvolition.com.

Community Signal is produced by Karn Broad, and Carol Benovic-Bradley is our editorial lead for this one last time. Thanks again for all your work, Carol, and thank you for listening.

If you have any thoughts on this episode that you’d like to share, please leave me a comment, send me an email or a tweet. If you enjoy the show, we would be so grateful if you spread the word and supported Community Signal on Patreon.